
Functional testing: the boring basics that catch real bugs

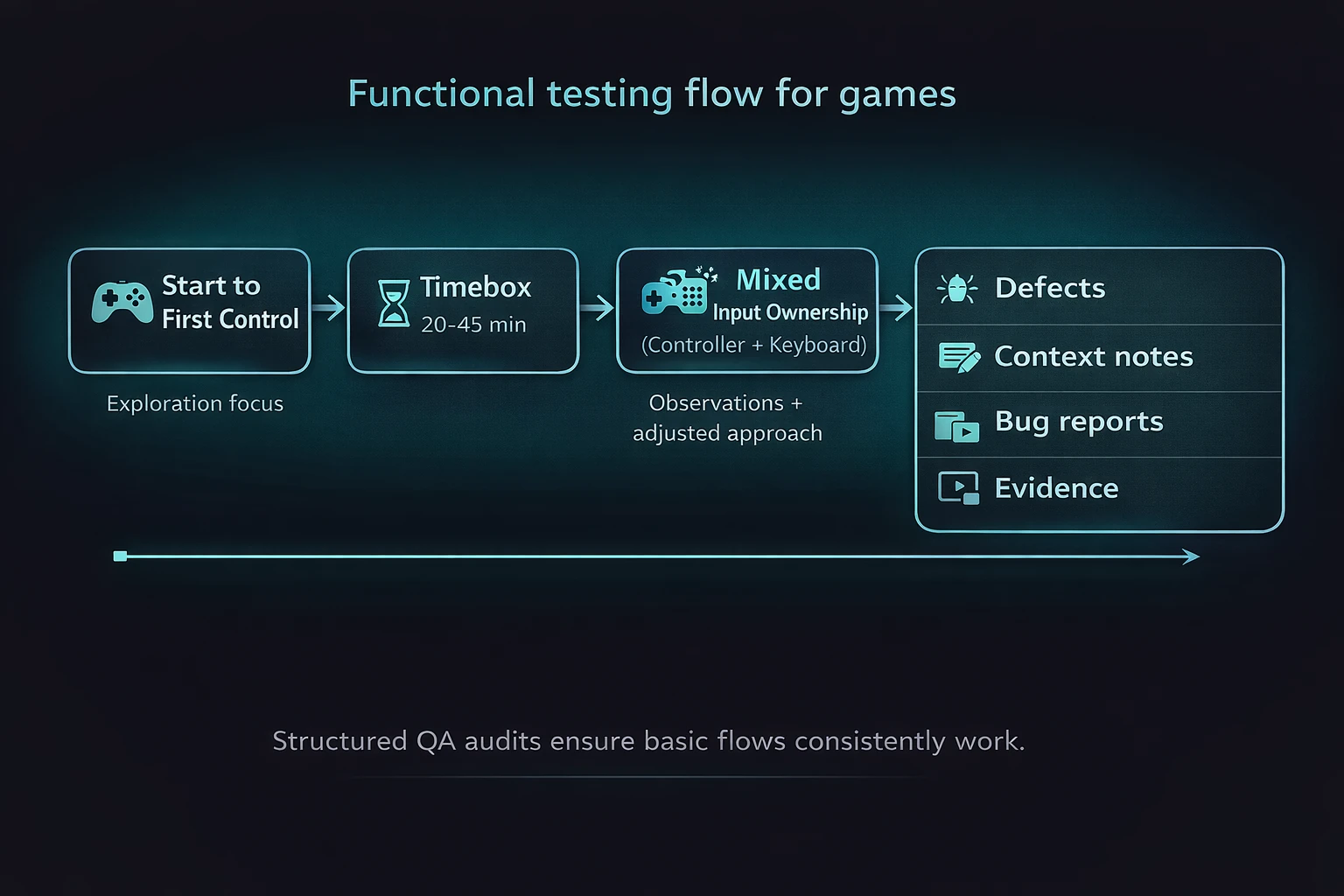

This article describes my functional testing workflow for manual QA, backed by a real one-week solo pass on Battletoads (PC, Game Pass). It shows what I test first, why those checks matter, and how I capture evidence that makes defects reproducible.

TL;DR

- What it is: a functional testing workflow for timeboxed solo passes.

- Backing project: Battletoads on PC (Game Pass), one-week pass (27 Oct to 1 Nov 2025).

- Approach: validate start-to-control and Pause/Resume first, then expand where risk appears (often input and focus).

- Outputs: pass/fail outcomes, reproducible defect reports, and short evidence recordings supporting each finding.

Functional testing workflow context and scope

This article is grounded in a self-directed portfolio pass on Battletoads (PC, Game Pass), build 1.1F.42718, run in a one-week timebox. Test focus was core functional flows and mixed input ownership (controller plus keyboard), with short evidence clips captured for reproducibility.

What functional testing is (in practice)

For me, functional testing is simple: does the product do what it claims to do, end-to-end, without excuses or interpretation? I validate core flows, confirm expected behaviour, and write issues so a developer can reproduce them without guessing.

The mistake is treating functional testing as “easy” and therefore less valuable. It is the foundation. If the foundation is cracked, everything built on top of it fails in more complicated ways.

Functional testing outputs: pass/fail results with evidence

Clear outcomes: pass or fail, backed by evidence and reproducible steps. Not vibes. Not opinions.

The two flows I verify first

- Start to first control: the first minute determines whether the product feels broken. If “New Game” does not reliably get you playing, nothing else matters. In Battletoads, I validate this from the Title screen into Level 1 and through the first arena transition before expanding scope.

- Pause and Resume: Pause stresses state, focus, input context, UI navigation and overlays. If Pause is unstable, you get a stream of defects that look random but are not. In Battletoads (PC), this surfaced early as keyboard and controller routing issues around Pause and Join In.

Input ownership testing: controller and keyboard hand-off

Mixed input is a feature, not an edge case. When a controller is connected and a keyboard is used, behaviour must remain predictable.

- Pause must open consistently.

- Navigation must respect the active input method.

- Confirm and back must not silently stop responding.

- Hand-off must not route to the wrong UI or disable input.

I treat keyboard and controller hand-off as a dedicated test area because it produces high-impact, easily reproducible defects. In this Battletoads pass, mixed input could misroute actions (for example, Resume opening Join In) and temporarily break controller response.
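To keep hand-off coverage systematic rather than ad hoc, the combinations can be enumerated before the session starts. The sketch below is a hypothetical Python helper, not part of the Battletoads workbook: the input sequences, screen names, and action names are illustrative, and it assumes a controller stays connected throughout, as in the setups described above.

```python
from itertools import product

# Hypothetical matrix dimensions. A controller is assumed to stay connected
# throughout; each sequence describes which device the tester uses, in order.
INPUT_SEQUENCES = [
    "controller only",
    "keyboard only",
    "controller then keyboard",
    "keyboard then controller",
]
SCREENS = ["Pause", "Join In"]
ACTIONS = ["open", "navigate", "confirm", "back", "close"]


def build_matrix():
    """Return one test case per input sequence x screen x action combination."""
    return [
        {"id": f"MI-{n:03d}", "inputs": seq, "screen": screen, "action": action}
        for n, (seq, screen, action) in enumerate(
            product(INPUT_SEQUENCES, SCREENS, ACTIONS), start=1
        )
    ]


if __name__ == "__main__":
    cases = build_matrix()
    print(len(cases))  # 4 sequences x 2 screens x 5 actions = 40
    print(cases[0])
```

Each row then gets a pass/fail outcome, and failing rows become defect report candidates. Enumerating first also makes it explicit which combinations a timeboxed pass did not reach.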

Common pattern: mixed input causes menu focus bugs

Controller connected, plus keyboard input, plus an open menu, often equals focus bugs. Easy to reproduce. Easy to prove. Usually easy to fix once isolated.

Bug evidence: what I capture and why

I favour short video clips (10 to 30 seconds) and only use screenshots when they add clarity. The goal is to make the defect obvious without forcing someone to scrub a long recording.

- Video: shows timing, input, and incorrect outcome together.

- Screenshot: supports UI state, text, or configuration.

- Environment: platform, build/version, input device, display mode.

If the evidence cannot answer what was pressed, what happened, and what should have happened, it is not evidence.
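That three-question rule, together with the environment fields above, can be expressed as a simple completeness check. This is a minimal Python sketch under assumed field names (`DefectReport`, `missing_fields`, and the environment keys are hypothetical, not any tracker's real schema). It only flags empty fields; it cannot judge whether a clip actually makes the defect obvious.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical environment keys, mirroring the Environment bullet above.
REQUIRED_ENV = ("platform", "build", "input_device", "display_mode")


@dataclass
class DefectReport:
    title: str
    steps: List[str]  # what was pressed, in order
    expected: str  # what should have happened
    actual: str  # what happened
    environment: Dict[str, str] = field(default_factory=dict)
    clips: List[str] = field(default_factory=list)  # paths to short video clips


def missing_fields(report: DefectReport) -> List[str]:
    """Return names of required fields that are empty; an empty list means complete."""
    missing = []
    if not report.steps:
        missing.append("steps")
    if not report.expected.strip():
        missing.append("expected")
    if not report.actual.strip():
        missing.append("actual")
    for key in REQUIRED_ENV:
        if not report.environment.get(key, "").strip():
            missing.append(f"environment.{key}")
    if not report.clips:
        missing.append("clips")
    return missing


if __name__ == "__main__":
    # Illustrative placeholder draft, not a logged defect.
    draft = DefectReport(
        title="Keyboard confirm on Resume opens Join In",
        steps=[
            "Connect controller",
            "Open Pause with controller Start",
            "Press keyboard confirm on Resume",
        ],
        expected="Game resumes",
        actual="Join In overlay opens",
        environment={
            "platform": "PC (Game Pass)",
            "build": "1.1F.42718",
            "input_device": "controller + keyboard",
        },
    )
    print(missing_fields(draft))  # ['environment.display_mode', 'clips']
```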

How I timebox a one-week pass

- Day 1: Smoke testing and baseline flow validation.

- Days 2 to 4: Execute runs, expand where risk appears, log defects immediately.

- Days 5 to 6: Retest, tighten repro steps, confirm consistency.

- Day 7: Summarise outcomes and document learnings.

Practical note: I start each session with a short baseline loop (load, gain control, Pause, Resume) before deeper checks. It catches obvious breakage early and avoids spending time on deeper checks against a broken build.
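The baseline loop works as an ordered gate: if an early step fails, deeper checks in that session are not worth running yet. A minimal Python sketch of that gate, assuming the tester records each step as pass or fail by hand (`BASELINE_LOOP` and `first_failure` are hypothetical names):

```python
from typing import Dict, Optional

# Steps of the per-session baseline loop, in the order they are run.
BASELINE_LOOP = ("Load", "Gain control", "Pause", "Resume")


def first_failure(observed: Dict[str, bool]) -> Optional[str]:
    """Return the first step that failed or was not recorded, or None if all passed.

    A missing entry counts as a failure, because an unchecked step is not a pass.
    """
    for step in BASELINE_LOOP:
        if not observed.get(step, False):
            return step
    return None


if __name__ == "__main__":
    session = {"Load": True, "Gain control": True, "Pause": True, "Resume": False}
    blocker = first_failure(session)
    if blocker is None:
        print("Baseline passed: continue with deeper checks")
    else:
        print(f"Baseline blocked at {blocker}: investigate before going deeper")
```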

Testing oracles used for functional verification

- In-game UI and outcomes: observable behaviour of core flows (control, progression, Pause/Resume).

- Controls menu bindings: used as an oracle for expected key behaviour (for example, Esc and Enter bindings).

- Consistency across repeated runs: behaviour confirmed via reruns to rule out one-off variance.
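Reruns only rule out one-off variance if the outcome of each run is recorded. A small Python sketch, assuming each rerun of identical steps is logged as `True` (defect reproduced) or `False` (not reproduced); the function name and labels are hypothetical:

```python
from typing import List, Tuple


def classify_reruns(outcomes: List[bool]) -> Tuple[str, str]:
    """Label a defect by how often it reproduced across reruns of identical steps.

    Returns (label, rate), where rate is "hits/total", e.g. "3/5".
    """
    if not outcomes:
        raise ValueError("at least one rerun is required")
    hits = sum(outcomes)  # True counts as 1, False as 0
    total = len(outcomes)
    if hits == total:
        label = "consistent"
    elif hits == 0:
        label = "not reproduced"
    else:
        label = "intermittent"
    return label, f"{hits}/{total}"


if __name__ == "__main__":
    print(classify_reruns([True, True, False, True, True]))  # ('intermittent', '4/5')
```

Reporting the rate alongside the label ("4/5") gives triage more to work with than "sometimes".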

Examples from the Battletoads functional pass

Example bug pattern: mixed input misroutes Pause and overlays

With a controller connected on PC, Pause opened and closed reliably via controller Start. Keyboard interaction on Pause could be ignored or misrouted into Join In, and in one observed case the controller became unresponsive until the overlay was closed. Short evidence clips were captured to show input, timing, and outcome together.

Micro-charter: mixed input ownership around Pause and overlays

Charter: Mixed input ownership around Pause and overlays (controller plus keyboard).

Goal: Confirm predictable focus, navigation, and confirm/back actions under common PC setups.

Functional testing takeaways (flows, input, evidence)

- Functional testing finds high-impact defects early because it targets the systems everything else relies on.

- Pause and overlays are reliable bug generators because they stress state and input routing.

- On PC, mixed input should be treated as a primary scenario, not an edge case.

- Short evidence clips reduce repro ambiguity and speed up triage.

- Repeating the same steps is how “random” issues become diagnosable patterns.

Functional testing FAQ (manual QA)

Is functional testing just basic or beginner testing?

No. Functional testing validates the core systems everything else depends on. When it’s done poorly, teams chase “random” bugs that are actually foundational failures.

Does functional testing only check happy paths?

No. It starts with happy paths, but expands wherever risk appears. Input ownership, Pause/Resume, and state transitions are common failure points.

How is functional testing different from regression testing?

Functional testing validates expected behaviour end-to-end. Regression testing verifies that previously working behaviour still holds after change. They overlap, but they answer different questions.

Is functional testing still relevant if automation exists?

Yes. Automation relies on a correct understanding of expected behaviour. Functional testing establishes that baseline and finds issues automation often misses.

What makes a functional bug report “good”?

Clear steps, a clear expected result, a clear actual result, and short evidence that shows input, timing, and outcome together.

Evidence and case study links

This page stays focused on the testing workflow. The case study links out to the workbook structure, runs, and evidence.