
Regression testing workflow: the risk-first checks that keep releases stable

An applied QA article, not a definition page and not a case study.

This is an article on my regression testing workflow for manual QA, backed by a real one-week solo pass on Sworn (PC Game Pass, Windows). It exists to show how I design regression scope from change signals, what I verify first (golden path), and how I record evidence that makes both passes and failures credible.

TL;DR

- Workflow shown: risk-first regression scoping → golden-path baseline → targeted probes → evidence-backed results.

- Example context: Sworn on PC Game Pass (Windows) used only as a real-world backing example.

- Build context: tested on the PC Game Pass build 1.01.0.1039.

- Scope driver: public SteamDB patch notes used as an external change signal (no platform parity assumed).

- Outputs: a regression matrix with line-by-line outcomes, session timestamps, and bug tickets with evidence.

Regression testing scope: what I verified and why

This article is grounded in a self-directed portfolio regression pass on Sworn using the PC Game Pass (Windows) build 1.01.0.1039, run in a one-week solo timebox.

Scope was change-driven and risk-based: golden-path stability (launch → play → quit → relaunch), save/continue integrity, core menus, audio sanity, input handover, plus side-effect probes suggested by upstream patch notes.

No Steam/Game Pass parity claim is made.

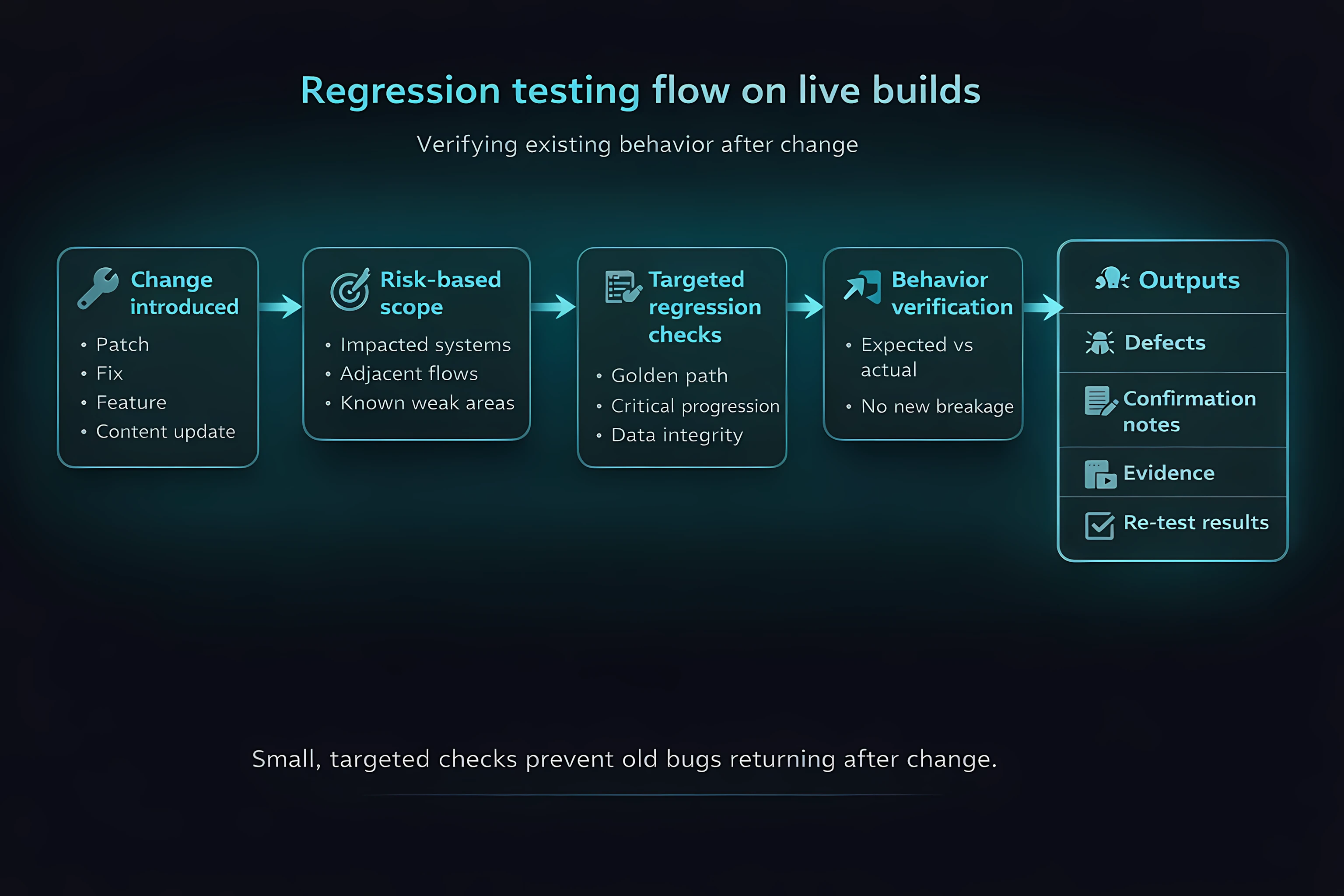

What regression testing is (in practice)

For me, regression testing is simple: after a change, does existing behaviour still hold? Not “re-test everything”, and not “run a checklist because that’s what we do”.

A regression pass is selective by design. Coverage is driven by risk: what is most likely to have been impacted, what is most expensive if broken, and what must remain stable for the build to be trusted.
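The three risk questions above can be turned into a simple selection rule. This is a minimal sketch, not the scoring used in the Sworn pass: the `Candidate` fields, the 1 to 3 rating scale, the likelihood × impact score, the threshold of 4, and the example ratings are all illustrative assumptions. Anything flagged as must-stay-stable is always in scope; everything else is ranked by score and cut at the threshold.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    # Hypothetical fields; ratings run from 1 (low) to 3 (high).
    area: str
    likelihood: int               # how likely the change touched this area
    impact: int                   # how expensive a break here would be
    must_stay_stable: bool = False  # required for the build to be trusted


def select_scope(candidates: List[Candidate], threshold: int = 4) -> List[str]:
    """Return in-scope areas: must-stay-stable first, then by descending risk score."""
    always = [c for c in candidates if c.must_stay_stable]
    ranked = sorted(
        (c for c in candidates
         if not c.must_stay_stable and c.likelihood * c.impact >= threshold),
        key=lambda c: c.likelihood * c.impact,
        reverse=True,  # sorted() stays stable, so ties keep their input order
    )
    return [c.area for c in always + ranked]


# Made-up ratings, only to show the mechanics.
candidates = [
    Candidate("golden path", likelihood=2, impact=3, must_stay_stable=True),
    Candidate("audio runtime", likelihood=3, impact=2),
    Candidate("codex navigation", likelihood=1, impact=2),
    Candidate("save/continue", likelihood=2, impact=3),
]
print(select_scope(candidates))
# → ['golden path', 'audio runtime', 'save/continue']
```

The point of the must-stay-stable flag is that some areas are never traded off against score: the baseline is always checked, however unlikely the change was to touch it.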

Regression testing outputs: pass/fail results with evidence

Clear outcomes: pass or fail, backed by evidence and repeatable verification. Not opinions. Not vibes.

Golden-path smoke baseline for regression testing

I start every regression cycle with a repeatable “golden-path smoke” because it prevents wasted time. If the baseline is unstable, deeper testing is noise.

In this Sworn pass, the baseline matrix line was BL-SMOKE-01: cold launch → main menu → gameplay → quit to desktop → relaunch → main menu. I also include a quick sanity listen for audio cut-outs during this flow.
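The gating rule behind BL-SMOKE-01 can be sketched as a short function. This is a hypothetical helper, not tooling from the Sworn pass: step results are still recorded by hand, and the code only encodes the rule that deeper testing waits until every baseline step has passed. The `baseline_gate` name and the list-of-booleans input are assumptions for illustration.

```python
from typing import List, Optional, Tuple

# Steps of BL-SMOKE-01, in order, as described above.
BL_SMOKE_01 = [
    "cold launch",
    "main menu",
    "gameplay",
    "quit to desktop",
    "relaunch",
    "main menu",
]


def baseline_gate(results: List[bool]) -> Tuple[bool, Optional[str]]:
    """Return (proceed, first_failed_step) from hand-recorded step results.

    results[i] is True if step i behaved as expected. Steps with no recorded
    result count as failures, so an incomplete run never opens the gate.
    """
    padded = results + [False] * (len(BL_SMOKE_01) - len(results))
    for step, ok in zip(BL_SMOKE_01, padded):
        if not ok:
            return False, step
    return True, None


print(baseline_gate([True] * 6))           # → (True, None)
print(baseline_gate([True, True, False]))  # → (False, 'gameplay')
```

Treating a missing result as a failure is a deliberate choice: a baseline that was only partly run should not count as a stable baseline.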

Why baseline stability matters in regression testing

The golden path includes the most common player actions (launch, play, quit, resume). If those are unstable, you will get cascading failures that masquerade as unrelated defects.

Regression testing scope: change signals and risk

For this project I used SteamDB patch notes as an external oracle: SWORN 1.0 Patch #3 (v1.0.3.1111), 13 Nov 2025. Using them did not mean I assumed those changes were present on PC Game Pass.

Instead, I used the patch notes as a change signal to decide where to probe for side effects on the Game Pass build. This is especially useful when you have no internal access, no studio data, and no changelog for your platform.
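One way to make the "signal, not parity" rule explicit is to decide the next step per patch-note item. This is a minimal sketch under stated assumptions: the `PatchNoteItem` type, the "fix"/"feature" classification, and the `plan_probe` helper are hypothetical, and the two items are the SteamDB entries discussed in this article. A new feature gets a presence check before anything else; a fix gets a probe of the behaviour it targets, whether or not it shipped on this platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatchNoteItem:
    text: str      # wording taken from the external patch notes
    kind: str      # "fix" or "feature": a hypothetical classification
    probe_id: str  # matrix line that covers this signal


def plan_probe(item: PatchNoteItem, present_on_build: Optional[bool] = None) -> str:
    """Turn one external change signal into a next step on the build under test."""
    if item.kind == "feature":
        if present_on_build is None:
            return f"{item.probe_id}: check presence on this build first"
        if not present_on_build:
            return f"{item.probe_id}: not applicable, capture evidence of absence"
        return f"{item.probe_id}: verify the feature, then probe for side effects"
    # A fix may or may not have shipped on this platform, so probe the
    # behaviour it targets either way instead of assuming parity.
    return f"{item.probe_id}: probe the affected behaviour for regressions"


music = PatchNoteItem("Fix for music cutting out", "fix", "STEA-103-MUSIC")
dialogue = PatchNoteItem("Dialogue Volume slider", "feature", "STEA-103-AVOL")
print(plan_probe(music))
print(plan_probe(dialogue, present_on_build=False))
```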

Regression outcomes: pass vs not applicable (with evidence)

SteamDB notes mention a fix for music cutting out, so I ran an audio runtime probe (STEA-103-MUSIC) and verified music continuity across combat, pause/unpause, and a level load (pass). SteamDB also mentions a Dialogue Volume slider. On the Game Pass build that control was not present, so the check was recorded as not applicable with evidence of absence (STEA-103-AVOL).

How my regression matrix is structured

My Regression Matrix lines are written to be auditable. Each line includes a direct check, a side-effect check, a clear outcome, and an evidence link. That keeps results reviewable and prevents “I think it’s fine” reporting.

- Baseline smoke: BL-SMOKE-01

- Settings persistence: BL-SET-01

- Save / Continue integrity: BL-SAVE-01

- Post-death flow sanity: BL-DEATH-01

- Audio runtime continuity probe: STEA-103-MUSIC

- Audio settings presence check: STEA-103-AVOL

- Codex / UI navigation sanity: STEA-103-CODEX

- Input handover + hot plug: BL-IO-01

- Alt+Tab sanity: BL-ALT-01

- Enhancement spend + ownership persistence: BL-ECON-01
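The auditability rule for matrix lines can be sketched as a small record type plus a review check. This is a hypothetical model, not the actual workbook: the `MatrixLine` field names, the evidence path format, and the split of the STEA-103-MUSIC wording into direct and side-effect checks are illustrative assumptions. The check flags any line that lacks either check, uses an outcome outside pass / fail / not applicable, or has no evidence link, including passes.

```python
from dataclasses import dataclass
from typing import List

OUTCOMES = {"pass", "fail", "not applicable"}


@dataclass
class MatrixLine:
    line_id: str
    direct_check: str
    side_effect_check: str
    outcome: str
    evidence: str  # link or path to a clip or screenshot (hypothetical format)
    session: str   # session reference such as "S3"


def audit_problems(line: MatrixLine) -> List[str]:
    """List the reasons a matrix line would not survive review."""
    problems = []
    if not line.direct_check.strip():
        problems.append("missing direct check")
    if not line.side_effect_check.strip():
        problems.append("missing side-effect check")
    if line.outcome not in OUTCOMES:
        problems.append(f"unclear outcome: {line.outcome!r}")
    if not line.evidence.strip():
        # Passes and not-applicable results are claims too, so they need evidence.
        problems.append("no evidence link")
    return problems


music = MatrixLine(
    "STEA-103-MUSIC",
    "music continuous through combat transitions",
    "music continuous after pause/unpause and a level load",
    "pass",
    "evidence/STEA-103-MUSIC.mp4",  # hypothetical path
    "S3",
)
vague = MatrixLine("EXAMPLE-01", "Alt+Tab sanity", "", "seems fine", "", "")  # hypothetical
print(audit_problems(music))  # → []
print(audit_problems(vague))
# → ['missing side-effect check', "unclear outcome: 'seems fine'", 'no evidence link']
```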

Save/Continue regression testing: anchors, not vibes

Save and Continue flows are a classic regression risk area because failures can look intermittent. To reduce ambiguity, I verify using anchors.

In this pass (BL-SAVE-01), I recorded four anchors: the room splash name (Wirral Forest), the health bucket (60/60), the weapon type (sword), and the start of the objective text. I then re-checked those anchors after a menu Continue and again after a full relaunch. Outcome: pass; the anchors matched throughout (session S2).
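Anchor verification reduces to comparing a recorded snapshot against what is observed at each checkpoint. This is a minimal sketch with a hypothetical `anchor_mismatches` helper; the dictionary keys are assumptions, and the objective-text anchor is left out because the article does not quote it. The second call uses made-up values purely to show what a failed check reports.

```python
from typing import Dict, Optional, Tuple

Anchors = Dict[str, str]


def anchor_mismatches(expected: Anchors, observed: Anchors) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {anchor: (expected, observed)} for each anchor that changed or is missing."""
    return {
        name: (value, observed.get(name))
        for name, value in expected.items()
        if observed.get(name) != value
    }


# Anchors recorded in BL-SAVE-01 (objective-text prefix omitted; not quoted in the article).
before = {"room": "Wirral Forest", "health": "60/60", "weapon": "sword"}

print(anchor_mismatches(before, {"room": "Wirral Forest", "health": "60/60", "weapon": "sword"}))
# → {}
# Hypothetical drift, to show what a failure would look like:
print(anchor_mismatches(before, {"room": "Wirral Forest", "health": "45/60"}))
# → {'health': ('60/60', '45/60'), 'weapon': ('sword', None)}
```

Reporting both the expected and observed value, rather than a bare "mismatch", is what lets someone else confirm the result from the record alone.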

Why anchors make regression results repeatable

“Continue worked” is not useful if someone else cannot verify what you resumed into. Anchors turn “seems fine” into a repeatable verification result.

QA evidence for regression testing: what I capture and why

For regression, evidence matters for passes as much as failures. A pass is still a claim.

- Video clips: show input, timing, and outcome together (ideal for flow and audio checks).

- Screenshots: support UI state, menu presence/absence, and bug clarity.

- Session timestamps: keep verification reviewable without scrubbing long recordings.

- Environment notes: platform, build, input devices, network state (cloud saves enabled).

If the evidence cannot answer what was done, what happened, and what should have happened, it is not evidence.
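The three-question test above, plus the environment notes from the list, can be expressed as a completeness check. This is a sketch under assumptions: the `Evidence` fields, the `evidence_gaps` helper, and the clip filename are hypothetical; the SWOR-6 wording and build details come from this article. Each empty answer is reported as a gap, and a record with no clip or screenshot attached is flagged as well.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    what_was_done: str
    what_happened: str
    what_should_have_happened: str
    platform: str = ""
    build: str = ""
    clips: List[str] = field(default_factory=list)  # video/screenshot paths (hypothetical)


def evidence_gaps(ev: Evidence) -> List[str]:
    """Name every question or environment detail the record leaves unanswered."""
    answers = {
        "what was done": ev.what_was_done,
        "what happened": ev.what_happened,
        "what should have happened": ev.what_should_have_happened,
        "platform": ev.platform,
        "build": ev.build,
    }
    gaps = [name for name, value in answers.items() if not value.strip()]
    if not ev.clips:
        gaps.append("clip or screenshot")
    return gaps


swor6 = Evidence(
    what_was_done="Pressed Continue after Defeat",
    what_happened="Defeat stayed in front; Stats loaded underneath; a new run started",
    what_should_have_happened="Full Stats screen in front, waiting for confirmation",
    platform="PC Game Pass (Windows)",
    build="1.01.0.1039",
    clips=["SWOR-6_S6.mp4"],  # hypothetical filename
)
print(evidence_gaps(swor6))  # → []
```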

Regression testing examples from the Sworn pass

Example regression bug: Defeat overlay blocks the Stats screen (SWOR-6)

Bug: [PC][UI][Flow] Defeat overlay blocks Stats; Continue starts a new run (SWOR-6).

Expectation: after Defeat, pressing Continue reveals the full Stats screen in the foreground and waits for player confirmation.

Actual: Defeat stays in the foreground, Stats renders underneath with a loading icon, then a new run starts automatically.

Impact: the player cannot review Stats before the next run starts.

Repro rate: 3/3, observed during progression verification (S2) and reconfirmed in a dedicated re-test (S6).

Patch-note probe example: music continuity check (STEA-103-MUSIC)

SteamDB notes mention a fix for music cutting out, so I ran STEA-103-MUSIC: 10 minutes of runtime with combat transitions, plus pause/unpause and a level load. Outcome: pass; music stayed continuous across those transitions (S3).

Evidence-based “not applicable” example: missing Dialogue Volume slider (STEA-103-AVOL)

SteamDB notes mention a Dialogue Volume slider, but on the Game Pass build the Audio menu only showed Master, Music, and SFX. Outcome: not applicable with evidence of absence (STEA-103-AVOL, S4). This avoids inventing parity and keeps the matrix honest.

Accessibility issues logged as a known cluster (no new build to re-test)

On Day 0 (S0), I captured onboarding accessibility issues as a known cluster (B-A11Y-01: SWOR-1, SWOR-2, SWOR-3, SWOR-4). Because no newer build shipped during the week, a regression re-test is not applicable until one exists. This is logged explicitly rather than implied.
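The re-test rule for a known cluster can be sketched as a build comparison. This is a minimal sketch, assuming build numbers are dot-separated integers on a single platform; `parse_build`, `retest_status`, and the later build number are hypothetical. Comparing a Steam version such as v1.0.3.1111 against a Game Pass build this way would imply the platform parity this article avoids, so only builds from the same platform should be compared.

```python
from typing import Tuple


def parse_build(version: str) -> Tuple[int, ...]:
    """Split a dotted build number into integers, e.g. "1.01.0.1039" -> (1, 1, 0, 1039)."""
    return tuple(int(part) for part in version.split("."))


def retest_status(logged_on: str, latest_available: str) -> str:
    """A known issue is due for regression re-test only once a newer build exists."""
    if parse_build(latest_available) > parse_build(logged_on):
        return "re-test due"
    return "not applicable: no newer build"


print(retest_status("1.01.0.1039", "1.01.0.1039"))  # → not applicable: no newer build
print(retest_status("1.01.0.1039", "1.01.0.1100"))  # → re-test due (hypothetical build)
```

Parsing into integer tuples avoids the string-comparison trap where "1.01.0.999" would sort after "1.01.0.1039".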

Results snapshot (for transparency)

In this backing pass, the matrix recorded: 8 pass, 1 fail, 1 not applicable, plus 1 known accessibility cluster captured on Day 0 with no newer build available for re-test. Counts are included here for context, not as the focus of the article.
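As a consistency check, the reported counts should account for each of the ten matrix lines listed earlier exactly once (8 + 1 + 1 = 10), with the known accessibility cluster tracked outside those counts. This is a small sketch; `reconcile` is a hypothetical helper, and the figures are the ones reported above.

```python
from collections import Counter
from typing import List

# The ten matrix lines listed earlier in this article.
MATRIX_LINES = [
    "BL-SMOKE-01", "BL-SET-01", "BL-SAVE-01", "BL-DEATH-01", "STEA-103-MUSIC",
    "STEA-103-AVOL", "STEA-103-CODEX", "BL-IO-01", "BL-ALT-01", "BL-ECON-01",
]


def reconcile(line_ids: List[str], counts: Counter) -> bool:
    """True when the outcome counts account for every (unique) matrix line exactly once."""
    return len(set(line_ids)) == len(line_ids) and sum(counts.values()) == len(line_ids)


reported = Counter({"pass": 8, "fail": 1, "not applicable": 1})
# Known clusters are tracked separately and are not part of the matrix counts.
known_clusters = {"B-A11Y-01": ["SWOR-1", "SWOR-2", "SWOR-3", "SWOR-4"]}

print(reconcile(MATRIX_LINES, reported))  # → True
print(f"{len(known_clusters)} known cluster(s) outside the matrix")
```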

Regression testing takeaways (risk, evidence, and verification)

- Regression testing is change-driven verification, not “re-test everything”.

- A repeatable golden-path baseline stops you wasting time on an unstable build.

- External patch notes can be used as a risk signal without assuming platform parity.

- Anchors make progression and resume verification credible and repeatable.

- “Not applicable” is a valid outcome if it is evidenced, not hand-waved.

- Pass results deserve evidence too, because they are still claims.

Regression testing FAQ (manual QA)

Is regression testing just re-testing old bugs?

No. Regression testing verifies that existing behaviour still works after change. It covers previously working systems, whether or not bugs were ever logged against them.

Do you need to re-test everything in regression?

No. Effective regression testing is selective. Scope is driven by change and risk, not by feature count.

How do you scope regression without internal patch notes?

By using external change signals such as public patch notes, previous builds, and observed behaviour as oracles, without assuming platform parity.

What’s the difference between regression and exploratory testing?

Regression testing verifies known behaviour after change. Exploratory testing searches for unknown risk and emergent failure modes. They complement each other but answer different questions.

Is a “pass” result meaningful in regression testing?

Yes. A pass is still a claim. That’s why regression passes should be supported with evidence, not just a checkbox.

When is “not applicable” a valid regression outcome?

When a feature is not present on the build under test and that absence is confirmed with evidence. Logging this explicitly is more honest than assuming parity or skipping the check silently.

Regression testing evidence and case study links

- Sworn regression case study (full artefacts and evidence)

- SteamDB patch notes used as external oracle (SWORN 1.0 Patch #3, v1.0.3.1111)

This page stays focused on the regression workflow. The case study links out to the workbook tabs (Regression Matrix, Sessions Log, Bug Log) and evidence clips.